Tao Hu

Computer Vision & Learning Group

Akademiestr. 7, Munich

Ludwig Maximilian University of Munich

I am a Postdoctoral Research Fellow with Björn Ommer in Ommer-Lab (Stable Diffusion Lab), focused on exploring the scalability and generalization ability of diffusion models in the nxtaim project. I finished my PhD at VISLab, University of Amsterdam.

I am open to supervising Bachelor's, Master's, and PhD students in Munich and globally. I am also open to discussion and collaboration; if you're interested, feel free to send an email.

My research focuses on introducing inductive biases into neural networks to achieve data efficiency through few-shot learning, generative models, etc. I am convinced that generative modelling will be the future of discriminative modelling.

Publication | GitHub | LinkedIn | Research Note | Chat with me

news

| Aug 01, 2025 | TREAD and ArtFlow are accepted by ICCV 2025, Congrats to the team~ |

|---|---|

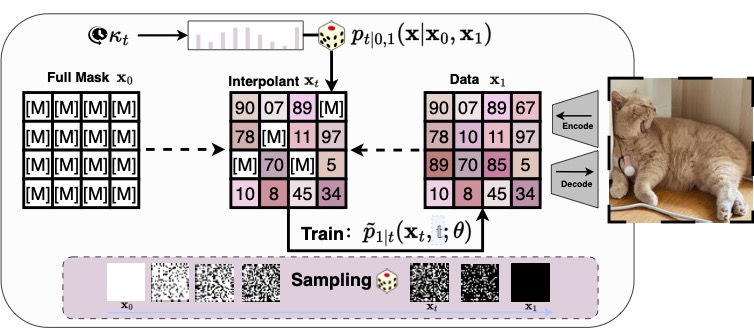

| Mar 11, 2025 | MaskFlow on arxiv |

| Mar 07, 2025 | Will give a talk at NxtAim Winter School about “Efficient Architecture for Representation”. |

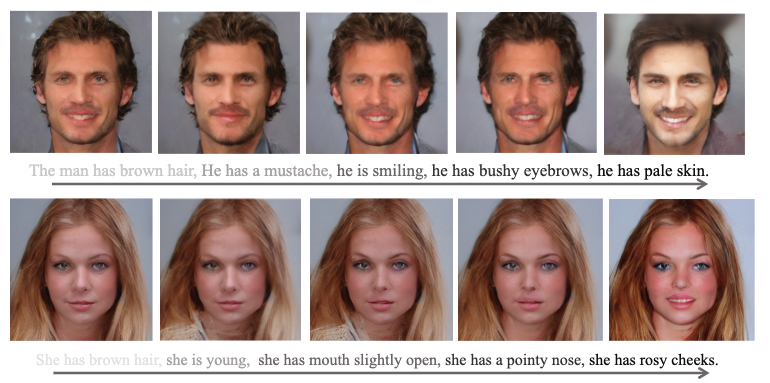

| Mar 01, 2025 | Continuous, Subject-Specific Attribute Control in T2I Models by Identifying Semantic Directions accepted by CVPR 2025. |

| Jan 23, 2025 | ToddlerDiffusion: Interactive Structured Image Generation with Cascaded Schrödinger Bridge accepted by ICLR 2025. |

selected publications

- Stochastic Interpolants for Revealing Stylistic Flows across the History of Art. In ICCV, 2025